Hello all,

I have been somewhat lazy about writing tech articles recently, mostly because I am working on some very interesting private projects for now. Note that if you do not want to trouble yourself and you don’t care about implementing your own stuff, you can just use CloudWatch. I wanted to write down a cheap alternative based on how I used to do logging before; it also had to be something that can be adapted to run inside our own infrastructure without any cloud involvement. You may as well use Filebeat and Logstash.

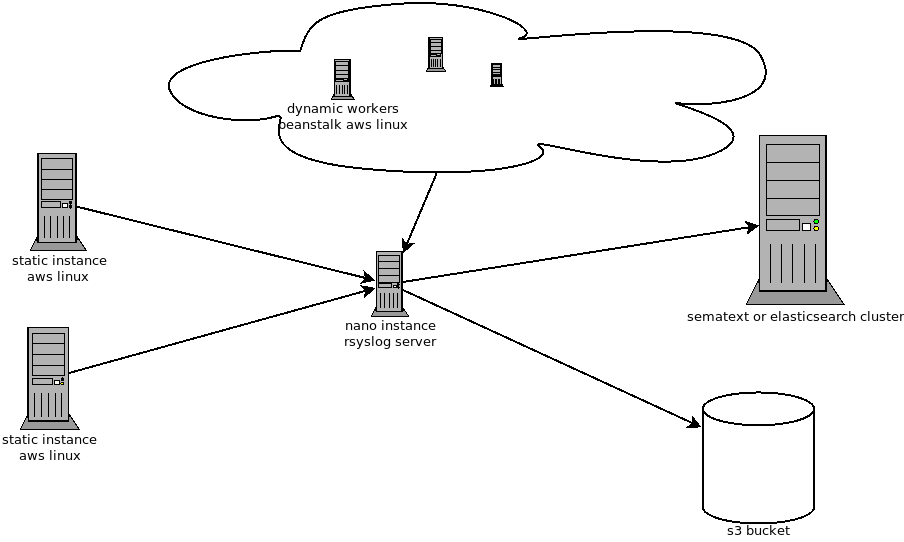

The following is a bit how my final result will be like:

A picture is worth a thousand words as they say, hence, here’s one for you:

From here on, we will refer to the static instances and the dynamic workers collectively as clients.

Since we do not want to do this manually each time, and Beanstalk is already used to create our instances, we can take advantage of the ebextensions mechanism that AWS provides for such scenarios. Of course, you can use Ansible for this purpose as well.

So you will need to create one S3 bucket, named yours3-conf-bucket here, and give the machines involved read access to it. On EC2 that access is granted through the instances’ IAM role rather than through their security groups.

Note that I have used one central key to encrypt the communication between these machines. You can obviously use a separate key for each machine and generate the keys at run time from the rsyslogconfig.sh configuration script; use the AWS server variables wisely there to implement multiple keys. For the sake of simplicity, let’s proceed with a single static key setup. To generate keys you can find some examples here.
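As one possible way to produce the files this setup expects, here is a minimal sketch that uses openssl (an assumption on my side, any tool that produces PEM files would do). The CA subject, the client common name and the output directory are placeholders; only the names ca.pem, rslclient-key.pem and rslclient-cert.pem correspond to the files downloaded from the bucket in the configuration further down.

```shell
#!/bin/sh
# Sketch: create a private CA and one client key pair with openssl.
# Usage: generate_keys OUT_DIR CLIENT_CN   (both arguments are placeholders)
generate_keys() (
  set -e
  out_dir="$1"
  client_cn="$2"
  mkdir -p "$out_dir"

  # Self-signed CA certificate and its private key
  openssl req -x509 -newkey rsa:2048 -nodes -days 365 \
    -subj "/CN=example-log-ca" \
    -keyout "$out_dir/ca-key.pem" -out "$out_dir/ca.pem" 2>/dev/null

  # Client private key plus a certificate signing request
  openssl req -newkey rsa:2048 -nodes \
    -subj "/CN=$client_cn" \
    -keyout "$out_dir/rslclient-key.pem" -out "$out_dir/rslclient.csr" 2>/dev/null

  # Sign the client request with the CA
  openssl x509 -req -days 365 \
    -in "$out_dir/rslclient.csr" \
    -CA "$out_dir/ca.pem" -CAkey "$out_dir/ca-key.pem" -CAcreateserial \
    -out "$out_dir/rslclient-cert.pem" 2>/dev/null
)

# Hypothetical usage:
# generate_keys ./keys client.example.internal
```

Keep ca-key.pem out of the bucket; the clients only need ca.pem plus their own key and certificate. Since the server side uses the x509/name mode, the common name you pick would have to match the permitted peer pattern configured there.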

You will also need to add a DNS entry that points to your log server. Even though all the IP addresses concerned are private IPs inside a VPC, I created a DNS entry for readability. In this case I added rsyslog-internal.gouvernma.mu to my Route 53 zone; you can obviously use something else.

ebextensions

In the packages section, you can see the packages that I need for this kind of setup. This is a YAML file, so indent with spaces only (tabs are not allowed) and keep the nesting consistent.

packages:
  yum:
    rsyslog: []
    gnutls: []
    gnutls-utils.x86_64: []
    cronie: []
    libzip: []
    rsyslog-gnutls: []

# A single commands: key holds all three steps; repeating the key would
# make the later blocks override the earlier ones.
commands:
  01keys_directory:
    command: mkdir -p /etc/rsyslog/keys/
  02yum_clean:
    command: yum clean all -y
  03yum_update:
    command: yum update -y

files:
  "/etc/rsyslog.d/client-rsyslog.conf":
    mode: "000644"
    owner: root
    group: root
    authentication: "S3Auth"
    source: "https://s3.eu-central-2.amazonaws.com/yours3-conf-bucket/client-rsyslog.conf"
  "/etc/rsyslog/keys/ca.pem":
    mode: "000644"
    owner: root
    group: root
    authentication: "S3Auth"
    source: "https://s3.eu-central-2.amazonaws.com/yours3-conf-bucket/ca.pem"
  "/etc/rsyslog/keys/key.pem":
    mode: "000644"
    owner: root
    group: root
    authentication: "S3Auth"
    source: "https://s3.eu-central-2.amazonaws.com/yours3-conf-bucket/rslclient-key.pem"
  "/etc/rsyslog/keys/cert.pem":
    mode: "000644"
    owner: root
    group: root
    authentication: "S3Auth"
    source: "https://s3.eu-central-2.amazonaws.com/yours3-conf-bucket/rslclient-cert.pem"
  "/etc/yum.repos.d/rsyslog.repo":
    mode: "000644"
    owner: root
    group: root
    authentication: "S3Auth"
    source: "https://s3.eu-central-2.amazonaws.com/yours3-conf-bucket/rsyslog.repo"
  "/home/ec2-user/rsyslogconfig.sh":
    mode: "000755"
    owner: root
    group: root
    content: |
      #!/bin/bash
      # On test environments, rename the tags so test logs stay separate
      if [ "${IS_TEST}" = "true" ]; then
        sed -i 's/apache-access/apache-access-test/g' /etc/rsyslog.d/client-rsyslog.conf
        sed -i 's/apache-error/apache-error-test/g' /etc/rsyslog.d/client-rsyslog.conf
        sed -i 's/sqs-log/sqs-log-test/g' /etc/rsyslog.d/client-rsyslog.conf
      fi
      /sbin/service rsyslog restart

container_commands:
  08applicationlog:
    command: /bin/touch /var/log/gouvernma.log && /bin/chown webapp:webapp /var/log/gouvernma.log
  09RWapplicationlog:
    command: /bin/chmod 700 /var/log/gouvernma.log
  10rsyslog_config:
    command: /home/ec2-user/rsyslogconfig.sh

services:
  sysvinit:
    rsyslog:
      enabled: true
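To see what the three sed calls in rsyslogconfig.sh do before letting them loose on a real instance, a small preview helper can be handy. This is only a sketch: the function name preview_test_tags is made up for illustration, and it prints the rewritten configuration instead of editing the file in place.

```shell
#!/bin/sh
# Print CONF_FILE with the same substitutions rsyslogconfig.sh applies when
# IS_TEST is "true". The file itself is left untouched (no -i).
preview_test_tags() {
  sed -e 's/apache-access/apache-access-test/g' \
      -e 's/apache-error/apache-error-test/g' \
      -e 's/sqs-log/sqs-log-test/g' "$1"
}

# Hypothetical usage on the deployed client config:
# preview_test_tags /etc/rsyslog.d/client-rsyslog.conf | grep -- '-test'
```

Note that the patterns are plain substrings, so the state file names (stat-apache-access and friends) and the forwarding rules get the -test suffix as well, and applying the in-place version twice to the same file would append the suffix twice.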

Repo File

Since Amazon Linux does not ship the latest rsyslog in its repositories, I used the Adiscon repository built for CentOS 6, which was the closest match at the time of writing. You can read more about it here.

[v8-stable]
name=Adiscon CentOS-6 - local packages for $basearch
baseurl=http://rpms.adiscon.com/v8-stable/epel-6/$basearch
enabled=1
priority=10
gpgcheck=0
gpgkey=http://rpms.adiscon.com/RPM-GPG-KEY-Adiscon
protect=1

Rsyslog Client side configuration

As you can see, I am fetching a file called client-rsyslog.conf. This file is the one that tells rsyslog how to send logging data to the rsyslog server. The Beanstalk configuration drops it into /etc/rsyslog.d/. Obviously, you can use Ansible to do this.

/etc/rsyslog.d/client-rsyslog.conf

$ModLoad imfile
$InputFilePollInterval 10
$PrivDropToGroup adm
$WorkDirectory /tmp

$DefaultNetstreamDriverCAFile /etc/rsyslog/keys/ca.pem
$DefaultNetstreamDriverCertFile /etc/rsyslog/keys/cert.pem
$DefaultNetstreamDriverKeyFile /etc/rsyslog/keys/key.pem
$DefaultNetstreamDriver gtls
$ActionSendStreamDriverMode 1
$ActionSendStreamDriverAuthMode anon

# SQS Log
$InputFileName /var/log/aws-sqsd/default.log
$InputFileTag sqs-log:
$InputFileStateFile stat-sqs-log
$InputFileSeverity error
$InputFilePersistStateInterval 10000
$InputRunFileMonitor

#any custom logs you have
# gouvernma Application Log
$InputFileName /var/log/gouvernma.log
$InputFileTag pc-application-log:
$InputFileStateFile stat-pc-application-log
$InputFileSeverity info
$InputFilePersistStateInterval 10000
$InputRunFileMonitor

# Apache access file:
$InputFileName /var/log/httpd/access_log
$InputFileTag apache-access:
$InputFileStateFile stat-apache-access
$InputFileSeverity info
$InputFilePersistStateInterval 10000
$InputRunFileMonitor

#Apache Error file:
$InputFileName /var/log/httpd/error_log
$InputFileTag apache-error:
$InputFileStateFile stat-apache-error
$InputFileSeverity error
$InputFilePersistStateInterval 10000
$InputRunFileMonitor

if $programname == 'apache-access' then @@rsyslog-internal.gouvernma.mu:514
if $programname == 'apache-error' then @@rsyslog-internal.gouvernma.mu:514
if $programname == 'pc-application-log' then @@rsyslog-internal.gouvernma.mu:514
if $programname == 'sqs-log' then @@rsyslog-internal.gouvernma.mu:514
if $programname == 'mem-cpu-log' then @@rsyslog-internal.gouvernma.mu:514

Part of my rsyslog.conf

module(load="imuxsock") # provides support for local system logging (e.g. via logger command)
module(load="imklog") # provides kernel logging support (previously done by rklogd)

# Use default timestamp format
$ActionFileDefaultTemplate RSYSLOG_TraditionalFileFormat

# Include all config files in /etc/rsyslog.d/
$IncludeConfig /etc/rsyslog.d/*.conf

How about the Server configuration now

Now we need something on the server that not only restricts communication to those machines, but also accepts only encrypted communication using those keys.

On top of that, we need to tell rsyslog to format our logs as JSON so that we can proceed to the next step with Logstash.

You will notice that the JSON template files rely on a lot of regular expressions. Don’t be afraid, it is pretty fun once you get the hang of it. Remember, though, that rsyslog uses POSIX ERE (and optionally BRE) expressions, so you need to be quite creative when writing them.
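Since a typo in an expression only shows up as an empty JSON field much later, it can help to try each expression outside rsyslog first. Below is a minimal sketch that relies on bash, whose =~ operator also speaks POSIX ERE. The helper name ere_submatch and the sample log line are made up for illustration; the expression is one of those used in the error template further down, written with single backslashes because the doubled ones are only needed inside the rsyslog config string.

```shell
#!/usr/bin/env bash
# Print submatch N of the POSIX ERE EXPR applied to TEXT.
# Prints nothing when the expression does not match.
ere_submatch() {
  local expr="$1" n="$2" text="$3"
  if [[ "$text" =~ $expr ]]; then
    printf '%s\n' "${BASH_REMATCH[$n]}"
  fi
}

# Hypothetical Apache 2.4 error log line, for illustration only.
line='[Mon Jan 01 10:00:00.123456 2018] [:error] [pid 1234] [client 10.0.0.5:54321] PHP Notice: demo'

# Same idea as regex.submatch="2" with regex.expression="(\\[client) ([^ ]+)(\\])"
ere_submatch '(\[client) ([^ ]+)(\])' 2 "$line"
```

With that sample line, the call prints 10.0.0.5:54321, which is what would end up in the client_ip field. Submatch 0 is the whole match, just like regex.submatch="0" in a template.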

So here we go with our server /etc/rsyslog.conf:

# TLS settings for the gtls network stream driver
$DefaultNetstreamDriver gtls
$DefaultNetstreamDriverCAFile /etc/rsyslog/keys/ca.pem
$DefaultNetstreamDriverCertFile /etc/rsyslog/keys/cert.pem
$DefaultNetstreamDriverKeyFile /etc/rsyslog/keys/key.pem

$ModLoad imtcp
$InputTCPServerStreamDriverAuthMode x509/name
$InputTCPServerStreamDriverPermittedPeer *.xxxx.gouvernma.mu #host you want to be allowed in
$InputTCPServerStreamDriverMode 1 # run driver in TLS-only mode

# Provides UDP syslog reception (disabled here)
#$ModLoad imudp
#$UDPServerRun 514 #choose your port

# Provides TCP syslog reception
$InputTCPServerRun 514

$IncludeConfig /etc/rsyslog.d/*.conf

Now, we also need to parse those log files and convert them to JSON; that’s what the configs in /etc/rsyslog.d/ are for 🙂

01-access-template-json.conf

This will be used to convert our apache access logs to json format

template(name="access-json-template"
  type="list") {
    constant(value="{")
    constant(value="\"@timestamp\":\"") property(format="json" name="timereported" dateFormat="rfc3339")
    constant(value="\",\"message\":\"") property(format="json" name="msg")
    constant(value="\",\"sysloghost\":\"") property(format="json" name="hostname")
    constant(value="\",\"severity\":\"") property(format="json" name="syslogseverity-text")
    constant(value="\",\"facility\":\"") property(format="json" name="syslogfacility-text")
    constant(value="\",\"programname\":\"") property(format="json" name="programname")
    constant(value="\",\"client_ip\":\"") property(format="json" name="msg" regex.type="ERE" regex.submatch="2" regex.nomatchmode="FIELD" regex.expression="(\\()([^\\)]+)")
    constant(value="\",\"endpoint\":\"") property(format="json" name="msg" regex.type="ERE" regex.submatch="4" regex.nomatchmode="FIELD" regex.expression="(\\[(.*)\\])[^(GET|PUT|POST|DELETE|PATCH)]+(GET|PUT|POST|DELETE|PATCH)(.*)(HTTP)")
    constant(value="\",\"app-date\":\"") property(format="json" name="msg" regex.type="ERE" regex.submatch="2" regex.nomatchmode="FIELD" regex.expression="(\\[([^ ]+))")
    constant(value="\",\"method\":\"") property(format="json" name="msg" regex.type="ERE" regex.submatch="3" regex.nomatchmode="FIELD" regex.expression="(\\[(.*)\\])[^(GET|PUT|POST|DELETE|PATCH)]+(GET|PUT|POST|DELETE|PATCH)(.*)(HTTP)")
    constant(value="\",\"log\":\"") property(format="json" name="msg" regex.type="ERE" regex.submatch="3" regex.nomatchmode="FIELD" regex.expression="(\\[(.*)\\])(.*)")
    constant(value="\",\"response\":\"") property(format="json" name="msg" regex.type="ERE" regex.submatch="2" regex.nomatchmode="FIELD" regex.expression="(HTTP\\/1\\.1)\" ([0-9]+) (([0-9]+)|(-)) \"")
    constant(value="\",\"procid\":\"") property(format="json" name="procid")
    constant(value="\"}\n")
}
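One detail of the endpoint and method expressions is worth knowing before you adapt them: in ERE, [^(GET|PUT|POST|DELETE|PATCH)] is a bracket expression, so it excludes the individual characters listed, not the words. The sketch below shows the effect with grep -E; the helper name matches and the two sample gaps are invented for illustration.

```shell
#!/bin/sh
# Return success when TEXT matches the POSIX ERE EXPR.
matches() {
  printf '%s\n' "$2" | grep -qE -- "$1"
}

# The part of the template expression that sits between "]" and the method.
gap_expr='^[^(GET|PUT|POST|DELETE|PATCH)]+$'

# A space and a double quote are outside the set, so this gap is accepted.
matches "$gap_expr" ' "' && echo 'gap accepted'

# "T" and "P" are inside the set, so a gap containing them is rejected.
matches "$gap_expr" ' TCP "' || echo 'gap rejected'
```

For a log format where only a space and a quote separate the closing bracket from the HTTP method, the expression behaves as intended. If your format puts other text there, any uppercase letter from the method names, a pipe or a parenthesis in that gap would stop the match.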

02-error-json-template

This will convert our apache error log file to json format.

template(name="error-json-template"
  type="list") {
    constant(value="{")
    constant(value="\"@timestamp\":\"") property(format="json" name="timereported" dateFormat="rfc3339")
    constant(value="\",\"@version\":\"1")
    constant(value="\",\"message\":\"") property(name="msg" format="json")
    constant(value="\",\"sysloghost\":\"") property(format="json" name="hostname")
    constant(value="\",\"severity\":\"") property(format="json" name="syslogseverity-text")
    constant(value="\",\"facility\":\"") property(format="json" name="syslogfacility-text")
    constant(value="\",\"programname\":\"") property(format="json" name="programname")
    constant(value="\",\"status\":\"") property(format="json" name="msg" regex.type="ERE" regex.submatch="2" regex.nomatchmode="FIELD" regex.expression="(\\[\\:)([^ ]+)(\\])")
    constant(value="\",\"pid\":\"") property(format="json" name="msg" regex.type="ERE" regex.submatch="2" regex.nomatchmode="FIELD" regex.expression="(\\[pid) ([^ ]+)(\\])")
    constant(value="\",\"client_ip\":\"") property(format="json" name="msg" regex.type="ERE" regex.submatch="2" regex.nomatchmode="FIELD" regex.expression="(\\[client) ([^ ]+)(\\])")
    constant(value="\",\"app-date\":\"") property(format="json" name="msg" regex.type="ERE" regex.submatch="1" regex.nomatchmode="FIELD" regex.expression="([A-Z]{1}[a-z]{2} [A-Z]{1}[a-z]{2} [0-9]{2} [0-9]{2}\\:[0-9]{2}\\:[0-9]{2}\\.[0-9]{6} [0-9]{4})")
    constant(value="\",\"error_class\":\"") property(format="json" name="msg" regex.type="ERE" regex.submatch="4" regex.nomatchmode="FIELD" regex.expression="(\\[client) ([^ ]+)(\\]) ([^:]+)")
    constant(value="\",\"log\":\"") property(format="json" name="msg" regex.type="ERE" regex.submatch="4" regex.nomatchmode="FIELD" regex.expression="(\\[client) ([^ ]+)(\\]) (.*)")
    constant(value="\",\"procid\":\"") property(format="json" name="procid")
    constant(value="\"}\n")
}

03-gouvernma-json-template

This will be used to convert my custom application log format into JSON. Yes, I could have generated JSON directly when writing the log, but the point of this article is to show how rsyslog can be used to achieve this.

template(name="gouvernma-json-template"
  type="list") {
    constant(value="{")
    constant(value="\"@timestamp\":\"") property(format="json" name="timereported" dateFormat="rfc3339")
    constant(value="\",\"@version\":\"1")
    constant(value="\",\"message\":\"") property(name="msg" format="json")
    constant(value="\",\"sysloghost\":\"") property(format="json" name="hostname")
    constant(value="\",\"facility\":\"") property(format="json" name="syslogfacility-text")
    constant(value="\",\"programname\":\"") property(format="json" name="programname")
    constant(value="\",\"app-date\":\"") property(format="json" name="msg" regex.type="ERE" regex.submatch="1" regex.nomatchmode="FIELD" regex.expression="([0-9]{4}-[0-9]{2}-[0-9]{2}T[0-9]{2}:[0-9]{2}:[0-9]{2})")
    constant(value="\",\"severity\":\"") property(format="json" name="msg" regex.type="ERE" regex.submatch="1" regex.nomatchmode="FIELD" regex.expression="(INFO|ERR|EMERG|NOTICE|WARN|DEBUG)")
    constant(value="\",\"log\":\"") property(format="json" name="msg" regex.type="ERE" regex.submatch="5" regex.nomatchmode="FIELD" regex.expression="(((INFO|ERR|EMERG|NOTICE|WARN|DEBUG) \\(([0-9]+)\\):))(.*)")
    constant(value="\"}\n")
}

04-sqs-json-template.conf

This one will convert the aws sqs logs into json

template(name="sqs-json-template"
  type="list") {
    constant(value="{")
    constant(value="\"@timestamp\":\"") property(name="timereported" dateFormat="rfc3339")
    constant(value="\",\"@version\":\"1")
    constant(value="\",\"message\":\"") property(name="msg" format="json")
    constant(value="\",\"sysloghost\":\"") property(name="hostname")
    constant(value="\",\"severity\":\"") property(name="msg" regex.type="ERE" regex.submatch="0" regex.nomatchmode="FIELD" regex.expression="(http-err|message)")
    constant(value="\",\"facility\":\"") property(name="syslogfacility-text")
    constant(value="\",\"programname\":\"") property(name="programname")
    constant(value="\",\"log\":\"") property(name="msg" regex.type="ERE" regex.submatch="3" regex.nomatchmode="FIELD" regex.expression="(http-err|message)(: )(.*)")
    constant(value="\"}\n")
}

Finally the main file, 06-output.conf

This is my main file. It routes each log entry to its matching template and sends the JSON-formatted entries to Logstash.

# These rules send matching lines to localhost at port 10514,
# each one using the JSON template that fits its program name
if $programname == 'apache-access' then {
    @@localhost:10514;access-json-template
}
else if $programname == 'apache-error' then {
    @@localhost:10514;error-json-template
}
else if $programname == 'pc-application-log' then {
    @@localhost:10514;gouvernma-json-template
}
else if $programname == 'sqs-log' then {
    @@localhost:10514;sqs-json-template
}

# Also keep a plain-text copy per host and program
$template TmplAuth, "/var/log/%HOSTNAME%/%PROGRAMNAME%.log"
*.* ?TmplAuth
#*.info,mail.none,authpriv.none,cron.none ?TmplMsg

Notice how I have referenced each template by its name, and how each incoming log is pushed to its matching template depending on its program name.

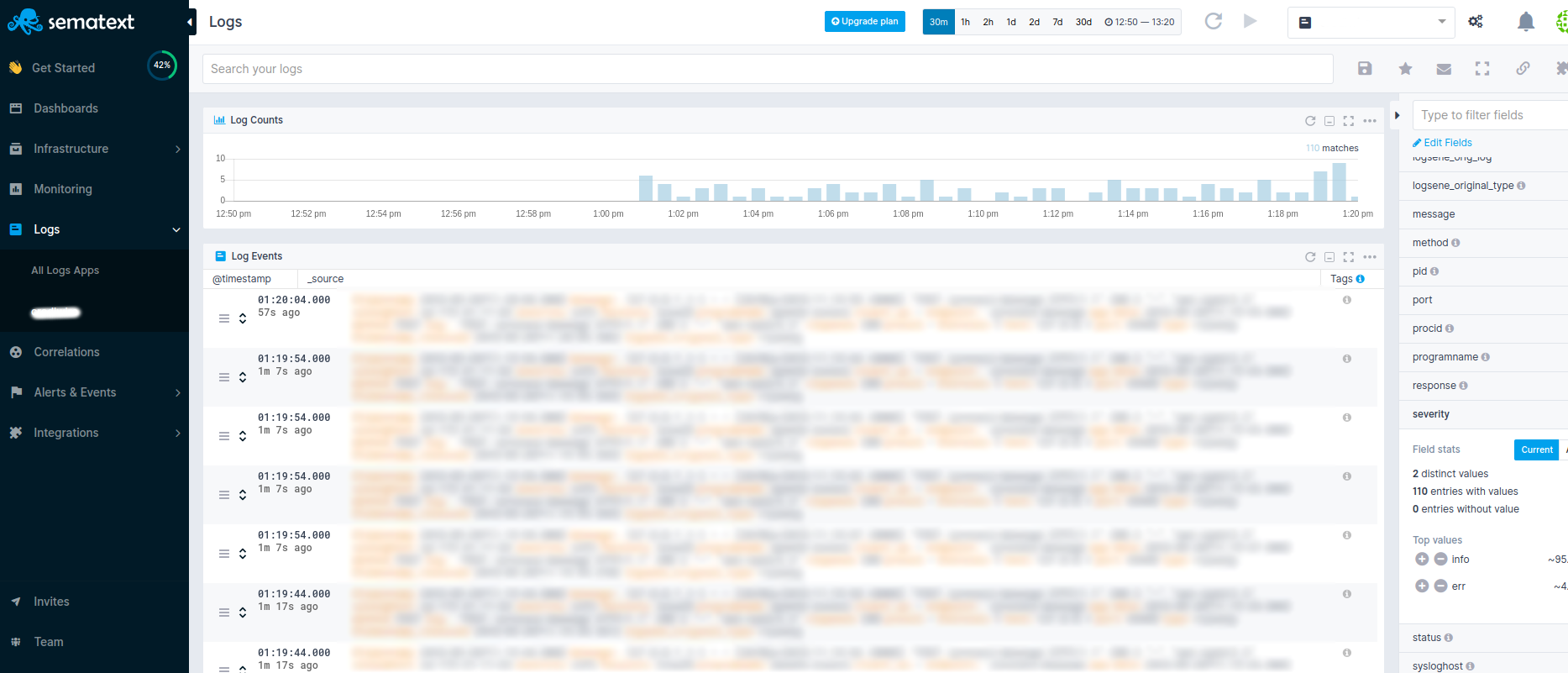

Logstash

From here on, you can redirect the logs anywhere you want. I have Logstash running and use it to send logs to Sematext or to a simple Elasticsearch cluster. Logstash is an open source, server-side data processing pipeline that ingests data from a multitude of sources simultaneously, transforms it, and then sends it to your favorite “stash.” You can do a lot of further magic with Logstash, but that is beyond the scope of this article. I invite you to read the Logstash documentation.


/etc/logstash/conf.d/filter.conf

filter {
  # Drop Apache's internal dummy connections
  if ([message] =~ /\(Amazon\) \(internal dummy connection\)/) {
    drop {}
  }
  # Apache access log date format
  if ([app-date] =~ /[0-9]{2}\/[A-Z]{1}[a-z]{2}\/[0-9]{4}:[0-9]{2}:[0-9]{2}:[0-9]{2}/) {
    date {
      match => ["app-date", "dd/MMM/yyyy:HH:mm:ss"]
      target => "app-date"
    }
  }
  # Apache error log date format
  if ([app-date] =~ /([A-Z]{1}[a-z]{2} [A-Z]{1}[a-z]{2} [0-9]{2} [0-9]{2}\:[0-9]{2}\:[0-9]{2}\.[0-9]{6} [0-9]{4})/) {
    date {
      match => ["app-date", "EEE MMM dd HH:mm:ss.SSSSSS yyyy"]
      target => "app-date"
    }
  }
}

/etc/logstash/conf.d/input.conf

input {
  tcp {
    host => "localhost"
    port => "10514"
    type => "rsyslog"
    codec => "json"
  }
}

/etc/logstash/conf.d/output.conf

This will move our logs to Sematext; you can point it at your own Elasticsearch cluster here if you want.

output {
  file {
    path => "/var/log/logstash/myjsonoutput.log"
  }
  elasticsearch {
    hosts => ["logsene-receiver.sematext.com:443"]
    ssl => "true"
    #use a valid token here
    index => "xxxxxxxx-xxxxxx-xxxxxx-xxxxxx-xxxxxxxxx"
    manage_template => "false"
  }
}

You can now set up logrotate to clear out old logs. I also added a small script, run from cron, that backs up and compresses everything old and uploads it to S3 using the AWS CLI. I won’t go into the details of it, but moving the files to S3 can easily be achieved with the AWS CLI as follows:

aws --region eu-central-2 s3 cp ${main_log_dir}.tar.bz2 s3://gouvernma-logs/
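For completeness, here is a rough sketch of how the compress-then-upload step could be wrapped, assuming tar with bzip2 support is available. The function names and the example directory are hypothetical; the region and bucket are the ones from the command above.

```shell
#!/bin/sh
# Compress one log directory into <dir>.tar.bz2 and print the archive path.
archive_logs() {
  main_log_dir="${1%/}"
  tar -cjf "${main_log_dir}.tar.bz2" \
    -C "$(dirname "$main_log_dir")" "$(basename "$main_log_dir")" || return 1
  printf '%s\n' "${main_log_dir}.tar.bz2"
}

# Upload one archive to the log bucket.
upload_archive() {
  aws --region eu-central-2 s3 cp "$1" s3://gouvernma-logs/
}

# Hypothetical usage from a cron script:
# upload_archive "$(archive_logs /var/log/archive/old-logs)"
```

Keeping the two steps separate makes it easy to check the archive before anything leaves the machine, and to delete the local copy only once the upload has succeeded.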

I hope you enjoyed this article and that it can be of some help to you. There are several ways of achieving this. Rsyslog is a nice project, and I think it has some nice features to offer.

The above can also be done with an in-house setup, without any cloud usage. You can improve a lot on this if you decide to get really creative with it.

Till then, happy hacking!

Pirabarlen/Selven/PC_THE_GREAT

Founder of hackers.mu